Project Westdrive

Virtual reality project simulating self-driving car rides to study public trust and behavioral responses using immersive environments and voice assistant systems.

A Virtual City for Autonomous Driving and Human Behavior Research in VR


Problem

Autonomous vehicles promise safer, cleaner, and more efficient mobility. Yet, despite widespread awareness of these benefits, public trust in self-driving cars remains critically low.

Surveys consistently reveal that the public’s hesitation stems not from misunderstanding the advantages, but from fear of malfunction and a lack of transparency—a deeply human response to opaque automation.

Project Westdrive set out to tackle this challenge by simulating what trust, anxiety, and comprehension look like in practice. Instead of asking people how much they trust AI, we put them inside it—placing participants into a self-driving car in a virtual city where the AI’s decisions could be observed, heard, and experienced.

Approach

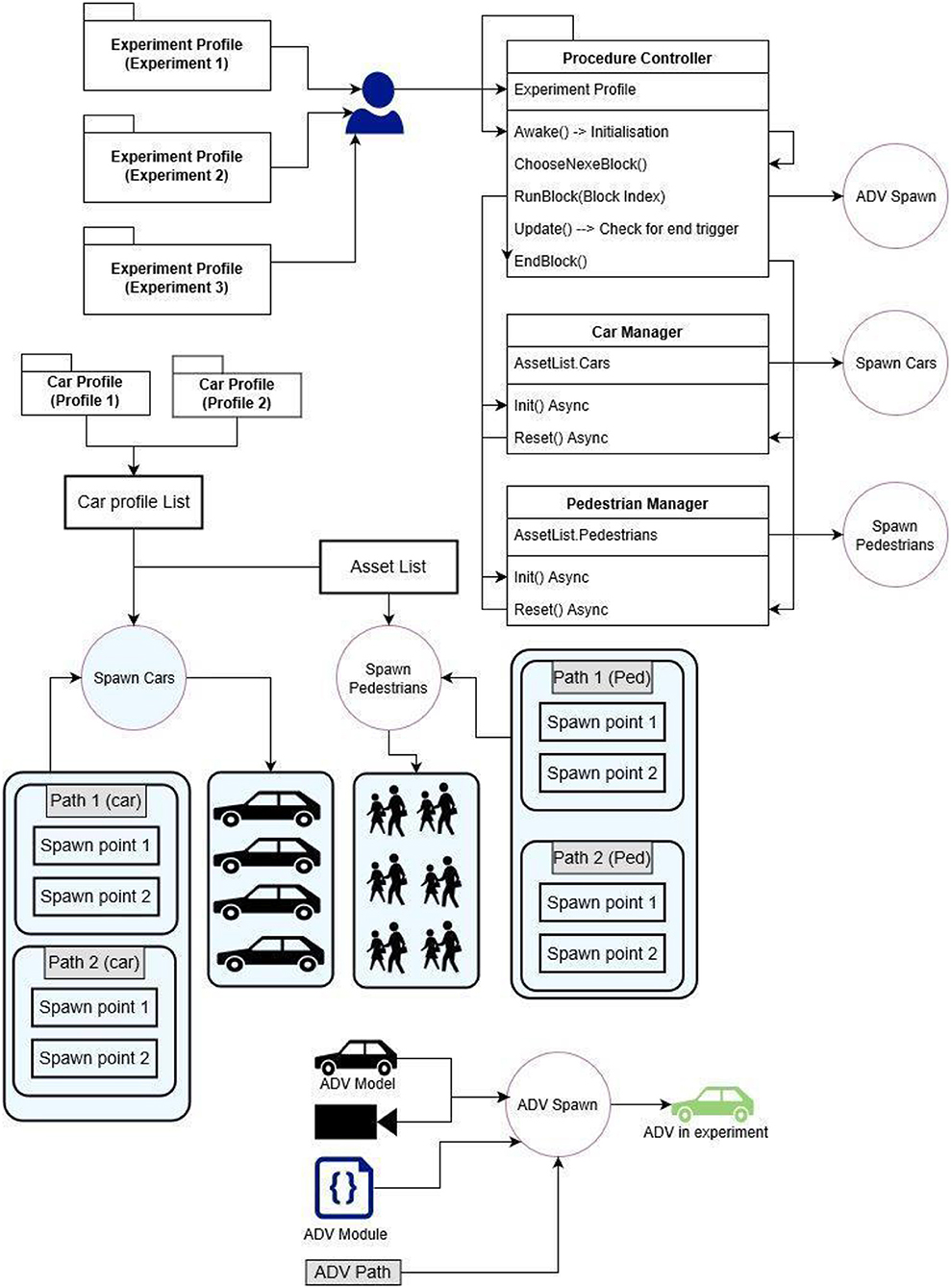

At its core, Westdrive is a large-scale, open-source virtual city built in Unity3D (C#) and designed for behavioral and cognitive research. It spans 230 hectares and hosts over 150 autonomous vehicles, 650 pedestrians, and tens of thousands of static and dynamic elements, all rendered realistically and optimized for stable, reproducible experiments in VR.

The experiment explored three driving conditions to study the role of explainable AI and anthropomorphic communication in shaping user trust:

- Autonomous Vehicle with Voice Assistant: The vehicle explained its decisions in real time (e.g., “Slowing down: pedestrian detected at crosswalk”).

- Autonomous Vehicle without Feedback: Participants heard a neutral podcast instead, keeping auditory engagement identical but removing transparency.

- Human Driver Control Condition: The vehicle simulated a taxi ride with naturalistic human narration and reactions.

Each participant experienced a 90-second journey through a dense urban scene containing unpredictable, semi-critical events—pedestrians crossing, vehicles merging, lights changing—designed to probe reactions and emotional responses.

At the end, participants completed a short Technology Acceptance Model (TAM) questionnaire assessing trust, perceived safety, ease of use, and intention to adopt.

Head orientation and position data were simultaneously recorded at 40 Hz, providing a proxy for gaze behavior, vigilance, and engagement.
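To illustrate how head orientation sampled at 40 Hz can stand in for gaze behavior, here is a minimal Python sketch. It is not the project's code: the function names, the (yaw, pitch) sample format, and the Unity-style axis convention (y up, z forward, positive pitch looking down) are assumptions made for illustration.

```python
import math

SAMPLE_RATE_HZ = 40.0  # recording rate stated in the text


def forward_vector(yaw_deg, pitch_deg):
    """Unit vector the head points along.

    Assumed convention (Unity-style): y is up, z is forward,
    positive yaw turns right, positive pitch looks down.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    return (
        math.sin(yaw) * math.cos(pitch),
        -math.sin(pitch),
        math.cos(yaw) * math.cos(pitch),
    )


def angular_speeds(samples, rate_hz=SAMPLE_RATE_HZ):
    """Head angular speed in deg/s between consecutive (yaw, pitch) samples."""
    vectors = [forward_vector(yaw, pitch) for yaw, pitch in samples]
    speeds = []
    for a, b in zip(vectors, vectors[1:]):
        dot = sum(ai * bi for ai, bi in zip(a, b))
        dot = max(-1.0, min(1.0, dot))  # guard acos against rounding error
        speeds.append(math.degrees(math.acos(dot)) * rate_hz)
    return speeds
```

Working with direction vectors rather than raw angle differences avoids the wrap-around problem at 0/360 degrees: a turn from 359 to 1 degree of yaw is correctly treated as a 2 degree movement.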

Infrastructure & Exhibition

Westdrive wasn’t just a lab experiment—it became a mobile interactive exhibit for the public.

It was deployed at two major venues:

- MS Wissenschaft 2018, the floating science exhibition ship of the German Federal Ministry of Education and Research (BMBF), traveling across Germany and Austria.

- BMBF Headquarters Exhibition, Berlin, for extended public engagement.

Physical Setup

- A half VW Golf cockpit with dual HTC Vive Pro headsets (driver and passenger view).

- Lighthouses embedded into the dashboard for permanent calibration.

- Two Raspberry Pi–powered questionnaire terminals, each hosting a short-code survey system connected to a MariaDB server on a local Ubuntu host.

- A large rear display teaching visitors about AI, neural networks, and mobility futures.

- Automated data backup and VPN monitoring, allowing 24/7 operation without on-site supervision.

This setup collected over 26,000 behavioral recordings and 8,000 questionnaire responses during the exhibition’s run. Each participant’s VR data—car position, orientation, gaze—were serialized in binary for speed, replayed offline through ray casting to estimate gaze vectors, and exported to CSV for statistical analysis.
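The binary-to-CSV step described above can be sketched in Python as follows. The frame layout (seven little-endian float32 values), the field names, and the helper functions are hypothetical; the actual Westdrive recording format is not specified here, and the ray-casting replay is left out.

```python
import csv
import io
import struct

# Hypothetical frame layout: timestamp, car position (x, y, z) and
# head orientation (yaw, pitch, roll), each a little-endian float32.
FRAME = struct.Struct("<7f")
FIELDS = ["t", "car_x", "car_y", "car_z", "head_yaw", "head_pitch", "head_roll"]


def pack_frames(frames):
    """Serialize an iterable of 7-value frames into one compact byte string."""
    return b"".join(FRAME.pack(*frame) for frame in frames)


def unpack_frames(blob):
    """Decode a byte string back into a list of 7-value tuples."""
    if len(blob) % FRAME.size:
        raise ValueError("recording is truncated or not in the expected layout")
    return list(FRAME.iter_unpack(blob))


def frames_to_csv(frames):
    """Render decoded frames as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(FIELDS)
    writer.writerows(frames)
    return buffer.getvalue()
```

A fixed-size record like this costs 28 bytes per frame and needs no parsing while recording, which is the usual reason for writing binary during the session and converting to CSV only afterwards.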

Results

The findings, later published in Frontiers in Virtual Reality and Frontiers in ICT, highlighted both the promise and limits of explainable AI:

- Voice-assisted explanations increased trust and perceived transparency compared to silent AI.

- Behavioral indicators such as head motion, fixation duration, and gaze entropy served as reliable proxies for trust and cognitive engagement.

- Yet, greater trust did not automatically lead to higher willingness to use self-driving vehicles—a clear reminder that trust and adoption are distinct psychological processes.

- Gender and demographic differences emerged strongly: female participants reported lower perceived ease of use but often higher critical awareness of automation.
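The gaze entropy mentioned among the behavioral indicators can be illustrated with a small Python sketch. It assumes one common definition, the Shannon entropy of how viewing directions are distributed over a grid of angular bins; the definition used in the published analysis may differ, and the function name and 10 degree bin size are illustrative choices.

```python
import math
from collections import Counter


def gaze_entropy(samples, bin_deg=10.0):
    """Shannon entropy in bits of (yaw, pitch) samples binned on a grid.

    0 bits means every sample fell into the same bin (fixed stare);
    higher values mean viewing directions were spread over more bins.
    """
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(
        (math.floor(yaw / bin_deg), math.floor(pitch / bin_deg))
        for yaw, pitch in samples
    )
    total = len(samples)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

For example, samples split evenly across four bins give 2 bits, while a participant who never looks away from one bin gives 0 bits.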

The combination of subjective self-reports and objective behavioral metrics revealed the subtle dynamics of human–AI interaction—how cognitive understanding, emotional comfort, and perceived control intertwine when humans confront autonomous systems.

My Role

- Conceived the research question and experimental logic

- Developed the Westdrive VR environment in Unity3D (C#), including modular city design and AI-driven dynamic entities

- Configured hardware and network infrastructure, including Ubuntu servers, VPN, and database systems

- Designed and tested the data pipeline for behavioral and questionnaire collection

- Conducted pilot testing and participant supervision

- Performed data analysis in Python (pandas, statsmodels), including MANOVA and regression models

- Authored and co-authored scientific papers reporting the project results

- Ensured GDPR-compliant data handling, anonymization, and secure backups

Open-Source Repositories

🧠 Simulation Environment

Westdrive City Simulation (Unity3D Project)

Complete Unity environment including scripts, prefabs, City AI toolkit, and reproducible scenes.

📊 Data Analysis & Results

MS Wissenschaft Data Analysis Repository

Includes statistical analysis scripts, gaze data reconstruction, and TAM evaluation methods.

Publications

- Nezami, F. N., Wächter, M. A., Pipa, G., & König, P. (2020). Project Westdrive: Unity City With Self-Driving Cars and Pedestrians for Virtual Reality Studies. Frontiers in ICT, 7(1), 1. https://doi.org/10.3389/fict.2020.00001

- Wächter, M. A., Nezami, F. N., Schüler, T., Pipa, G., & König, P. (2020). Talking Cars, Doubtful Users – A Population Study in Virtual Reality. Frontiers in Virtual Reality, 1, 1. https://doi.org/10.3389/frvir.2020.00001

Keywords: Virtual reality, autonomous driving, explainable AI, human–machine interaction, trust in automation, ethical cognition, behavioral modeling, open-source simulation