ErgoVR

Virtual reality research investigating decision-making and action planning using puzzles and tool interaction tasks with eye tracking and grasp data.

Investigating Human Decision-Making and Action Planning in Virtual Reality

Overview

Project ErgoVR explores how humans plan and execute complex actions in realistic, interactive environments. Building on the cognitive framework of embodied decision making, we used immersive virtual reality (VR) to study how gaze, hand movements, and cognitive load interact during problem solving and tool use.

Problem

Traditional experiments on decision making and action planning often rely on simplified, static tasks. However, real-world cognition involves dynamic visual search, physical interaction, and moment-to-moment planning under uncertainty.

The goal of Project ErgoVR was to uncover how humans coordinate eye, head, and hand movements while planning and executing actions, especially when a task calls for on-the-fly decisions rather than learned scripts.

Approach

We developed two complementary VR paradigms:

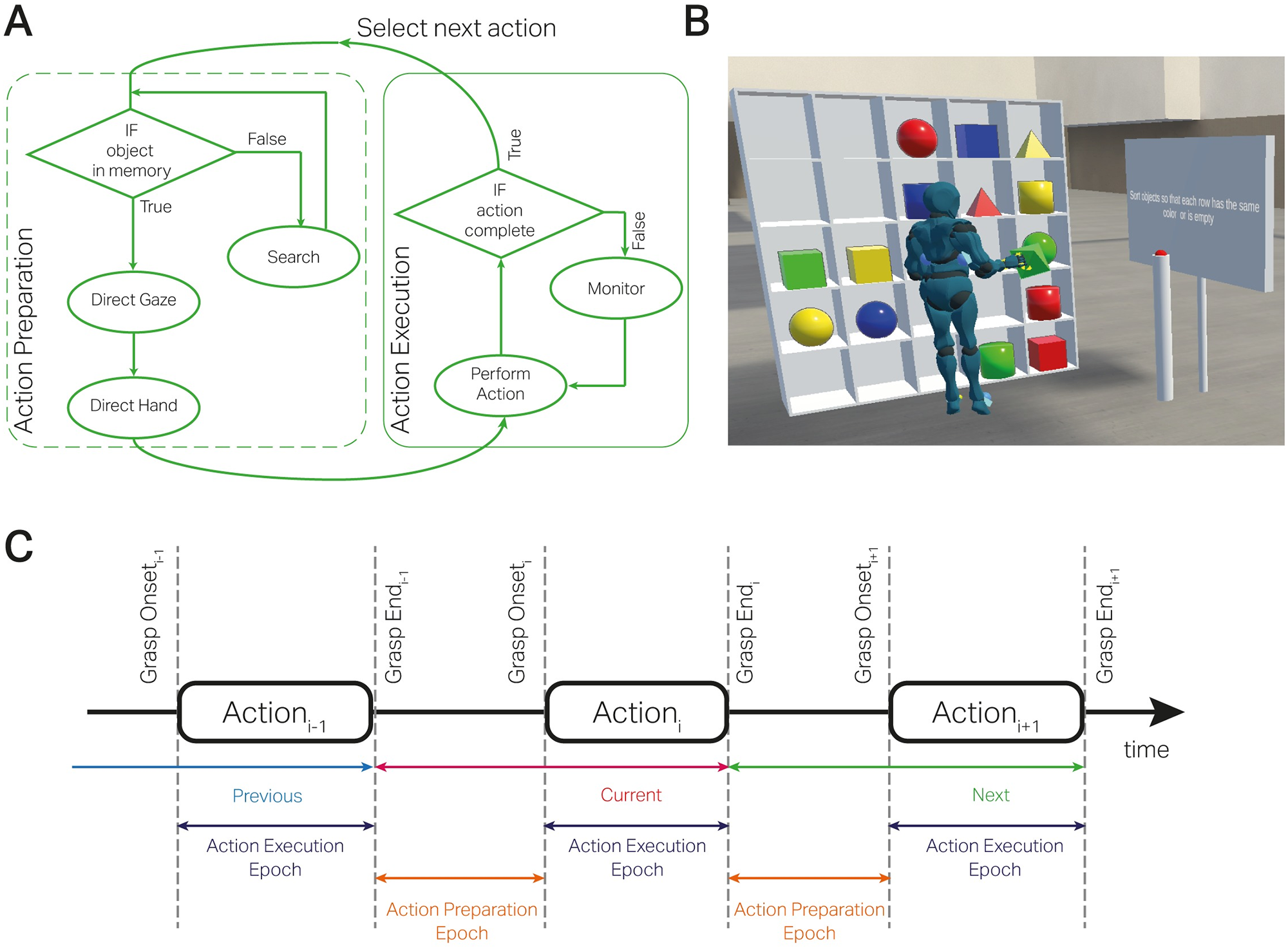

1. VR Sudoku Task

Participants solved a Sudoku-like puzzle by manipulating colored 3D shapes across three difficulty levels.

We recorded:

- Eye-tracking (gaze position and fixations)

- Head orientation

- Hand movements and grasp actions

This design enabled analysis of the planning phase (before grasp) and execution phase (during grasp), revealing how visual attention precedes and accompanies motor actions.
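To make the planning/execution split concrete, here is a minimal Python sketch of how fixations could be labeled relative to grasp intervals. It is an illustration under stated assumptions, not the pipeline used in the study: the `Fixation` and `Grasp` records, their field names, and the rule "a fixation belongs to the execution phase if its onset falls inside a grasp interval" are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    onset: float  # fixation onset in seconds (hypothetical field)
    target: str   # label of the fixated object (hypothetical field)


@dataclass
class Grasp:
    start: float  # grasp onset in seconds
    end: float    # grasp release in seconds


def label_phase(fixations, grasps):
    """Label each fixation 'execution' if its onset lies inside any
    grasp interval [start, end), otherwise 'planning'."""
    labels = []
    for fix in fixations:
        in_grasp = any(g.start <= fix.onset < g.end for g in grasps)
        labels.append("execution" if in_grasp else "planning")
    return labels


# Made-up example data, for illustration only.
fixations = [
    Fixation(0.2, "cell_A"),
    Fixation(0.9, "shape_red"),
    Fixation(1.4, "cell_B"),
    Fixation(2.6, "shape_blue"),
]
grasps = [Grasp(1.0, 2.0)]
phases = label_phase(fixations, grasps)
```

With this rule, fixations that occur after one grasp ends count as planning for the next grasp. A real analysis would likely work on fixation intervals rather than onsets alone.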

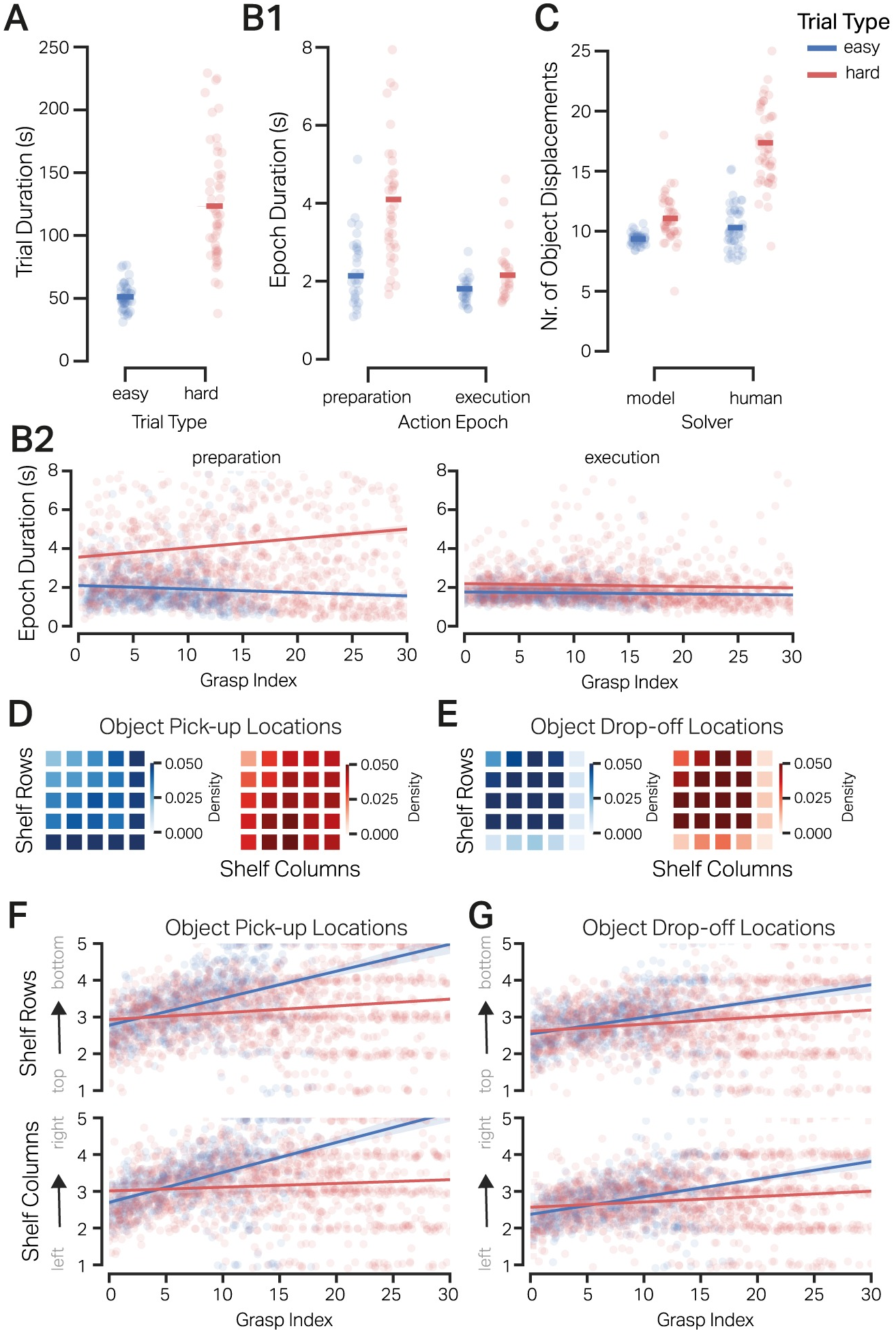

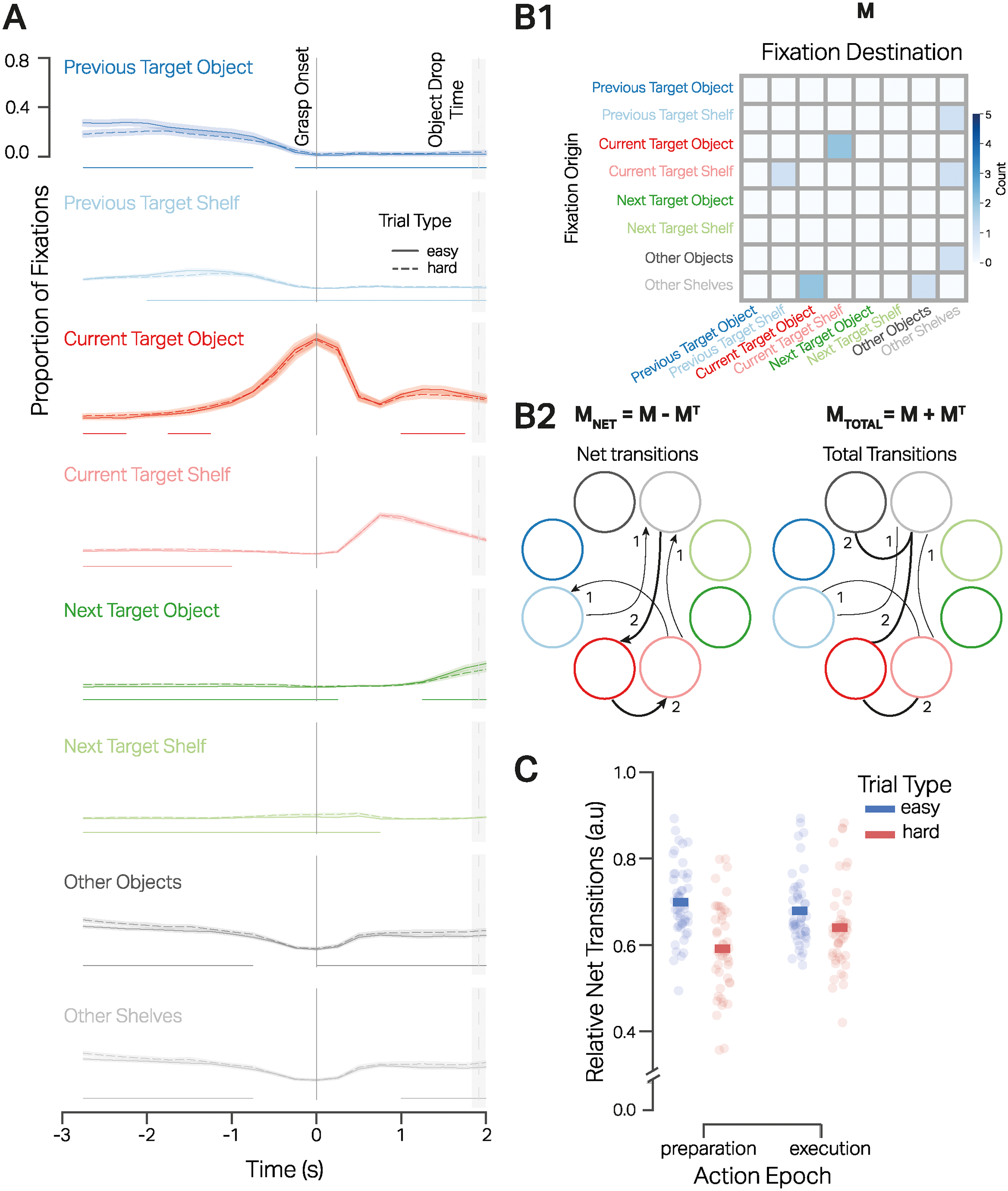

Findings (Keshava et al., 2024, PLOS Computational Biology)

The data showed that participants primarily employed just-in-time gaze strategies: they fixated the next action target shortly before acting rather than pre-planning long sequences. Under higher cognitive load, participants’ preparation times and gaze entropy increased, and their behavior deviated from the optimal strategies predicted by computational models. These results demonstrate how humans offload planning demands onto the environment, favoring adaptive, frugal use of working memory.
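As a rough illustration of what "gaze entropy" can measure, the sketch below computes the Shannon entropy of how fixations are distributed over regions of interest. This is one common, simple definition and is an assumption here; the exact entropy measure used in the paper is not described on this page. The function name `gaze_entropy` and the region labels are hypothetical.

```python
import math
from collections import Counter


def gaze_entropy(fixated_rois):
    """Shannon entropy (in bits) of the distribution of fixations
    over regions of interest. Higher values mean gaze is spread
    more evenly across regions."""
    counts = Counter(fixated_rois)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    entropy = 0.0
    for count in counts.values():
        p = count / total
        entropy += p * math.log2(1 / p)
    return entropy


# Made-up sequences, for illustration only.
focused = ["target", "target", "target", "target"]
spread = ["cell_A", "cell_B", "cell_C", "cell_D"]

focused_entropy = gaze_entropy(focused)  # all fixations on one region
spread_entropy = gaze_entropy(spread)    # fixations spread over four regions
```

Under this definition, a sequence concentrated on a single region has zero entropy, while one spread evenly over four regions has two bits, which matches the intuition that a more dispersed visual search yields higher entropy.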

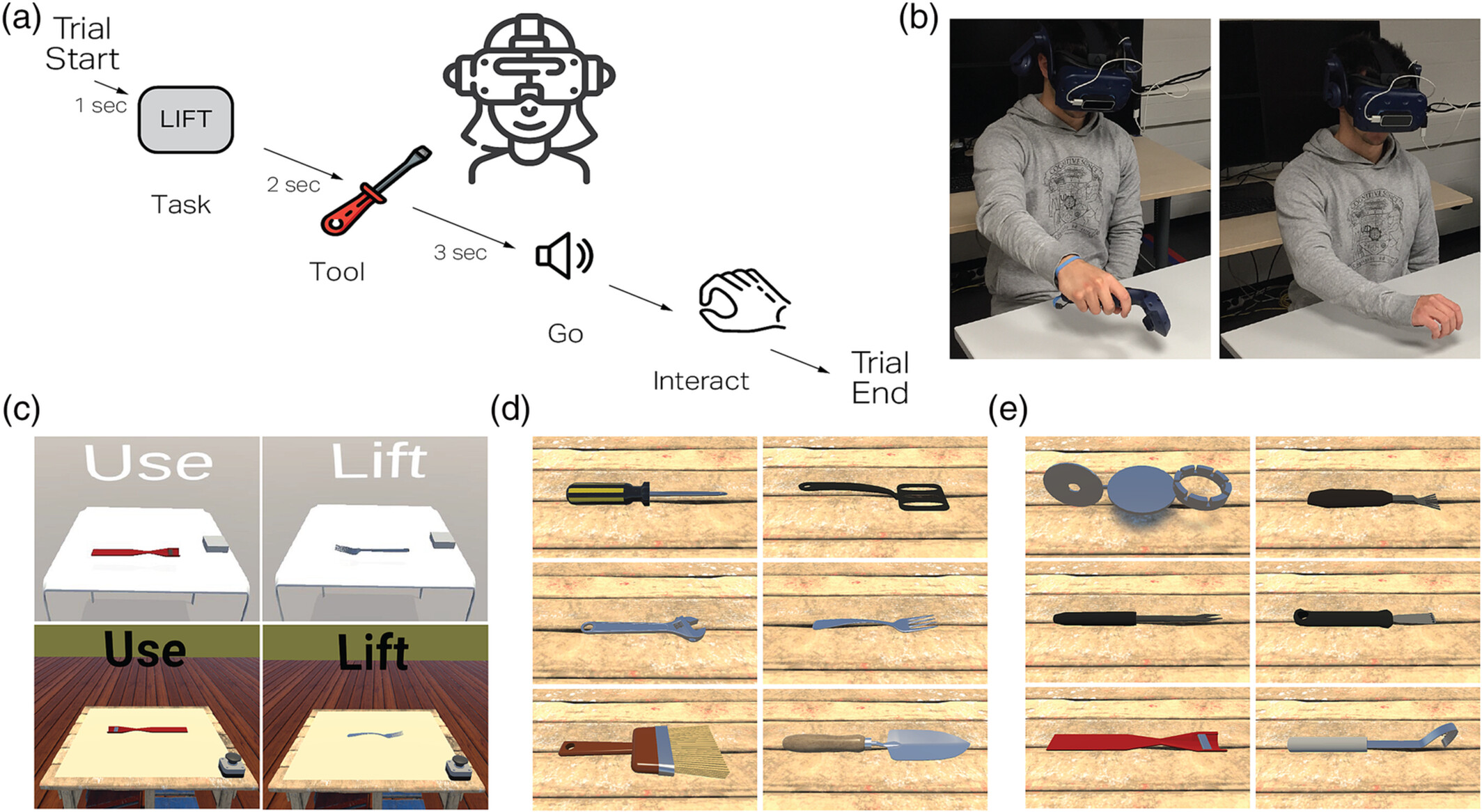

2. Tool Interaction Task

In a second experiment, participants interacted with virtual tools varying in familiarity (e.g., hammer vs. lemon zester) and handle orientation (left/right), and were asked to either lift or use them.

The experiment was run at two levels of interaction realism:

- Controller-based (low realism) interaction with HTC Vive

- Hand-tracked (high realism) interaction using Leap Motion

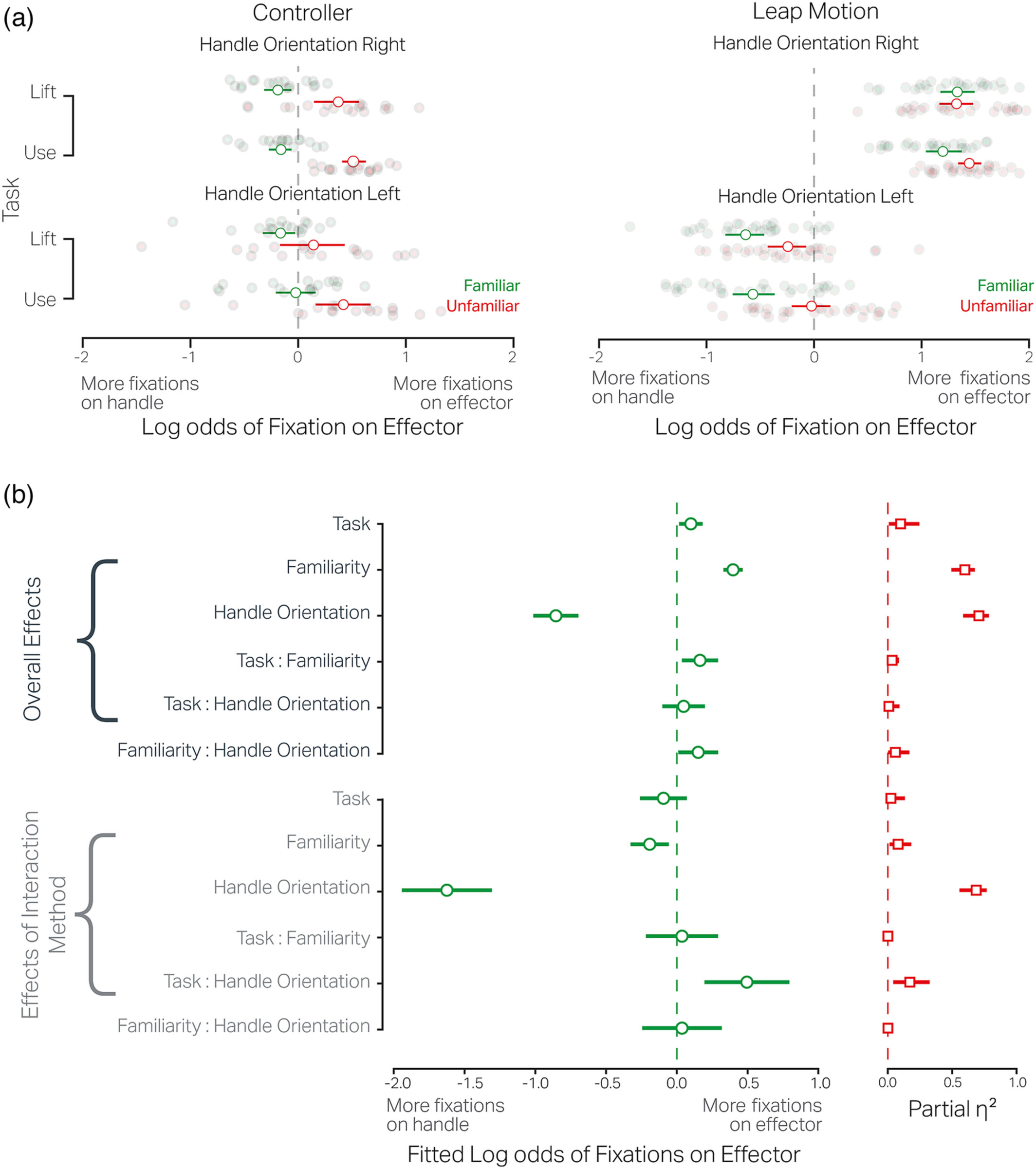

Findings (Keshava et al., 2023, European Journal of Neuroscience)

Anticipatory gaze behavior differed with tool familiarity and interaction realism. Participants fixated more on tool effectors when preparing to use unfamiliar tools, indicating active inference of mechanical function. In high-realism settings, gaze shifted toward tool handles when orientation conflicted with handedness, revealing proximal goal planning for grasping. The study highlights how realism and embodiment modulate predictive gaze control and action affordances.
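One simple way to quantify the effector-versus-handle comparison is the share of fixations that land on the effector in each condition. The sketch below is a hypothetical illustration, not the statistical analysis from the paper: the function `effector_share`, the `(condition, region)` input format, and the sample values are all assumptions.

```python
from collections import defaultdict


def effector_share(fixations):
    """Given (condition, region) pairs, return for each condition the
    fraction of tool fixations that landed on the effector rather than
    the handle. Fixations on other regions are ignored."""
    counts = defaultdict(lambda: {"effector": 0, "handle": 0})
    for condition, region in fixations:
        if region in ("effector", "handle"):
            counts[condition][region] += 1
    return {
        condition: c["effector"] / (c["effector"] + c["handle"])
        for condition, c in counts.items()
    }


# Made-up fixation labels, for illustration only (not study data).
sample = [
    ("familiar", "handle"), ("familiar", "handle"),
    ("familiar", "handle"), ("familiar", "effector"),
    ("unfamiliar", "effector"), ("unfamiliar", "effector"),
    ("unfamiliar", "effector"), ("unfamiliar", "handle"),
    ("unfamiliar", "background"),
]
shares = effector_share(sample)
```

In practice the same tally could be broken down further by task (lift vs. use), handle orientation, and realism level.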

Results Summary

- Just-in-time planning: Gaze reaches the next action target shortly before the hand acts, keeping working-memory demands low.

- Cognitive load effects: Higher difficulty increases visual search and prolongs preparation.

- Action affordances: Tool familiarity and realism shape the anticipatory allocation of attention.

- Hierarchical planning: Distal (goal-oriented) and proximal (grasp-oriented) fixations emerge based on context.

Together, these findings advance our understanding of embodied decision-making, supporting theories of active inference and embodied cognition.

My Role

- Assisted in designing and implementing the VR experiments

- Analyzed multimodal data (eye, hand, head tracking)

- Visualized gaze trajectories and action sequences in VR post-processing

- Supervised and supported the development of the tool interaction experiment

- Contributed to the interpretation of results linking gaze strategies to planning and affordance mechanisms

References

- Keshava, A., Nosrat Nezami, F., Neumann, H., Izdebski, K., Schüler, T., & König, P. (2024). Just-in-time: Gaze guidance in natural behavior. PLOS Computational Biology, 20(10), e1012529. https://doi.org/10.1371/journal.pcbi.1012529

- Keshava, A., Gottschewsky, N., Nosrat Nezami, F., Schüler, T., & König, P. (2023). Action affordance affects proximal and distal goal-oriented planning. European Journal of Neuroscience, 57(9), 1546–1560. https://doi.org/10.1111/ejn.15963

Keywords: Eye–hand coordination, cognitive planning, VR experiments, action affordance, active inference, embodied cognition